Australian organisations and their IT teams may be “missing the point” of artificial intelligence if they focus solely on using generative AI to boost productivity and accelerate existing plans, according to a leading expert in the technology.

Martin D. Adams, an ethical AI entrepreneur and business advisor, told audiences at Sydney’s SXSW Festival in October that some AI applications touted by consulting firms like McKinsey & Company, Accenture, and Deloitte could actually be risky for organisations and miss the true value of AI.

“Their view as I understand it is to help push the idea of AI as helping us achieve business cases that are already approved, to do things we’re already doing, but to do them faster and cheaper,” Adams said.

Calling this productivity framing of AI’s potential the “mad view of AI” and “short-sighted,” he said there was nothing wrong with doing things faster and cheaper, but that pursuing those gains without considering the broader business and societal context could put enterprises’ relationships with customers and communities at risk.

Generative AI’s allure can cause businesses to miss its true purpose

Adams explained that generative AI collapses the gap between an idea and its execution, eliminating much of the time, cost, and effort traditionally required for creation.

“Generative AI plays into the mad view of AI really seductively, and dangerously seductively, if we’re not careful,” he warned.

Generative AI’s appeal can lead businesses into new ventures, but Adams warned that this is not always beneficial in a digital or AI-driven age. For example, in the marketing industry, AI is supercharging the AdTech and social media race for content views, often with the push of a button.

SEE: Organisations are facing barriers getting AI into production

“You’ve got people getting promotions on the basis of numbers of views skyrocketing,” Adams noted. “Meanwhile, brand equity and loyalties are all absolutely disappearing because we’ve mechanised production, and it’s really, really dangerous. It’s not actually helping our relationships.”

The biggest problem enterprises in any industry are likely to have is what Adams calls the “informed company bias.” This is a problem where, because of their position, companies think they are more informed than they are and end up being less informed than they need to be.

“They under-invest in the systems, and the mindsets, and the people, and the technology to understand market trends, consumer preferences, and the knowledge to adapt and respond to those,” he explained.

Organisations and IT can use AI to become ‘sensitive’

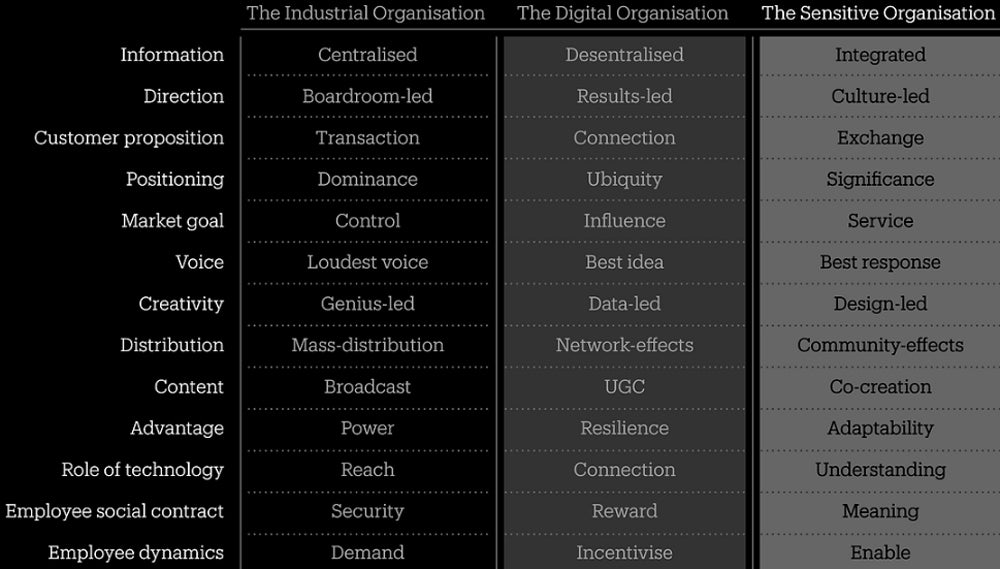

In contrast, the most effective organisations, which Adams calls “sensitive organisations,” use generative AI in tandem with other technologies, including narrow AI, applied AI, and analytics. They do this to build a more holistic picture of the unmet needs of communities and customers.

“They [sensitive organisations] understand people the way those people would describe themselves; with all their full dimensionality and complexity and everything else, rather than seeing them as purely sort of commercial entities,” he said.

PREMIUM: How to use AI in business

Adams added that AI has the potential to offer organisations this sensitivity, rather than stagnation. “In business and life, the opposite of sensitive is not strong, resilient, and robust. It’s actually death. It’s actually to be cut off from the reality of what is going on out there,” he said.

How to turn your enterprise into a sensitive organisation

Adams explained that sensitive organisations often use AI to process, integrate, and respond to information in ways that enhance capabilities and competitiveness, rather than simply boosting productivity.

Taking in information

Adams said AI technologies now allow organisations to pull in information from the outside world at scale, depth, and speed. This allows them to better understand real demand, interests, and communities, rather than simply treating markets as consumers.

He cited the example of banking institution UBS, which used AI and content analysis to discover that its high-net-worth clients were consuming a significant amount of content related to isolation, loneliness, and mental health struggles. This gave the bank insight into the unmet needs of this community.

Integrating information

Sensitive organisations tend to integrate the information and insights gathered through AI into the broader organisation. This can allow stakeholders across the business to change the shape and function of the company so that it can adapt to its environment.

Adams said L’Oreal’s NYX brand, for example, was able to use AI and content analysis to identify naturally occurring communities and interests around “goth romance, goth horror, and goth comedy.” The brand then used this information to design a new product line aligned with those community interests.

Responding to information

Adams said sensitive organisations use integrated information to develop a strong “sense and response” capability, where they are more open, receptive, and responsive to change. For example, he said sensitive organisations are unlikely to use AI merely to produce more of the same at a faster rate. Instead, they are likely to leverage it to redefine the problems they face and create more precise problem statements.

IT leaders should focus on psychological safety

Adams recommended organisations use narrow AI “upstream,” not as a production technology, but as a tool to understand demand, interest, community, and unmet needs. Generative AI can then be used to spread that information throughout the organisation and to respond sensitively to the environment.

He also urged IT leaders not to forget the teams and employees they will be working with to deploy AI.

“If you’re a leader of any type, and you’re talking about AI as being in there to create these efficiencies, to automate processes, you’ve got to be aware this might be very, very scary for people in your organisation; AI is a system, but you’re also bringing it into a system,” he cautioned.

Creating psychological safety within teams will actually support AI’s role in the organisation, he said.

“Emphasise the fact AI can unlock things we wouldn’t be able to do but for the existence of AI, and it can enable them to do their best work,” Adams urged. “Having this view is not some diplomatic thing and doesn’t get in the way of adoption; it actually greases the wheels for adoption to create psychological safety.”